Introduction

Amazon Redshift is one of the most widely used services in the AWS ecosystem, and is a familiar component in many cloud architectures. In this article, we’ll cover the key facts you need to know about this cloud data warehouse, and the use cases it is best suited for. We’ll also discuss its limitations and the scenarios where you might want to consider alternatives.

What is Amazon Redshift?

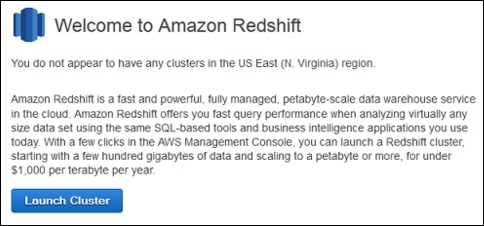

Amazon Redshift is a fully managed cloud data warehouse offered by AWS. First introduced in 2012, Redshift is today used by thousands of customers, typically for workloads ranging from hundreds of gigabytes to petabytes of data.

Redshift is based on PostgreSQL 8.0.2 and supports standard SQL for database operations. Under the hood, various optimizations are implemented to provide fast performance even at larger data scales. These include massively parallel processing (MPP) and read-optimized columnar storage.
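To see why a columnar layout suits analytical queries, consider the toy Python sketch below. It is not how Redshift stores data internally, and the `orders` rows and the `to_columns` helper are made up for illustration. The point is only that an aggregate over one column needs to touch just that column's values.

```python
# Toy illustration only: Redshift's real storage format is far more elaborate.
# The table contents and helper names are hypothetical.
rows = [
    {"order_id": 1, "region": "EU", "amount": 120.0},
    {"order_id": 2, "region": "US", "amount": 80.0},
    {"order_id": 3, "region": "EU", "amount": 50.0},
]


def to_columns(table_rows):
    """Pivot a row-oriented table into one list per column."""
    return {name: [row[name] for row in table_rows] for name in table_rows[0]}


columns = to_columns(rows)

# Row layout: every full row is visited just to reach a single field.
total_from_rows = sum(row["amount"] for row in rows)

# Column layout: only the 'amount' column is read.
total_from_columns = sum(columns["amount"])
```

Both totals are the same; the difference is how much data has to be scanned to compute them, which matters more as tables get wider and longer.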

What is a Redshift Cluster?

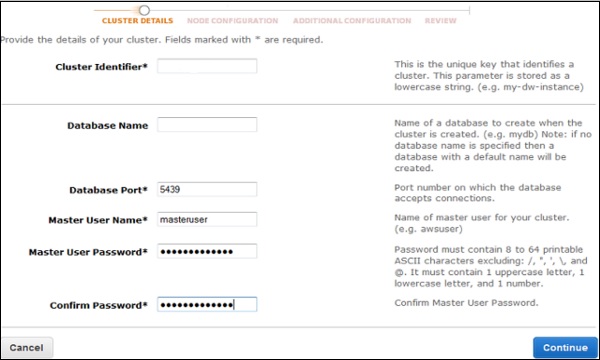

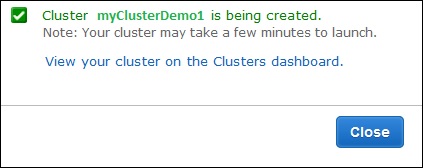

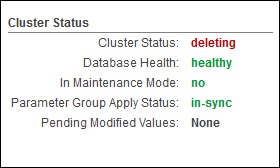

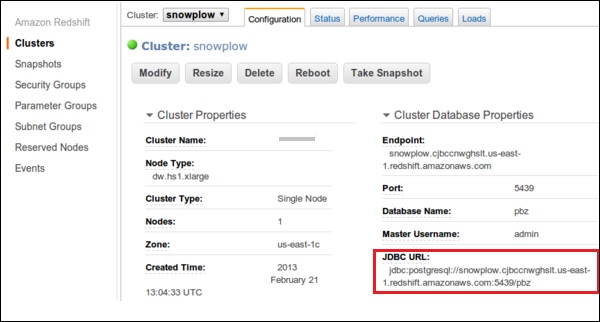

A Redshift cluster is a group of nodes provisioned as resources for a specific data warehouse. Each cluster consists of a leader node and one or more compute nodes. When a query is executed, Redshift’s MPP design automatically distributes the processing needed to return the results of the SQL query across the available compute nodes.
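The leader/compute split can be pictured as a scatter-gather pattern. The sketch below is a simplified model in Python, not Redshift's implementation: threads stand in for compute nodes, the round-robin distribution is an assumption, and all function names are hypothetical.

```python
from concurrent.futures import ThreadPoolExecutor


def split_into_slices(values, node_count):
    """Distribute values round-robin across the compute nodes."""
    return [values[i::node_count] for i in range(node_count)]


def compute_node_partial(slice_values):
    """Each compute node aggregates only its own slice: (sum, count)."""
    return sum(slice_values), len(slice_values)


def leader_average(values, node_count=3):
    """The leader fans the work out, then merges the partial results."""
    slices = split_into_slices(values, node_count)
    with ThreadPoolExecutor(max_workers=node_count) as pool:
        partials = list(pool.map(compute_node_partial, slices))
    total = sum(partial_sum for partial_sum, _ in partials)
    count = sum(partial_count for _, partial_count in partials)
    return total / count if count else None


amounts = [120.0, 80.0, 50.0, 30.0, 20.0]
average = leader_average(amounts)
```

Note that the nodes return partial sums and counts rather than partial averages, so the leader can combine them correctly even when the slices are uneven.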

The right cluster size depends on the amount of data stored in your database, the number of queries being executed, and the desired performance.

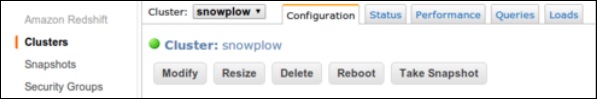

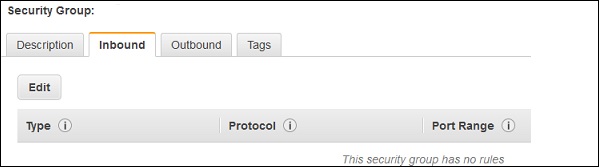

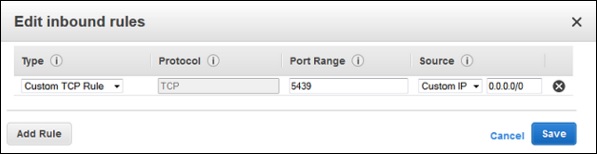

Scaling and managing clusters can be done through the Redshift console, the AWS CLI, or programmatically through the Redshift Query API.
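As a rough sketch of the programmatic route, the function below wraps the `resize_cluster` call of the AWS SDK for Python (boto3). The wrapper name and the cluster identifier in the usage comment are hypothetical, and the client is passed in as an argument so the function can be tried without AWS credentials.

```python
def resize_redshift_cluster(client, cluster_id, number_of_nodes):
    """Ask Redshift to change the node count of an existing cluster.

    `client` is expected to be a boto3 Redshift client, for example
    boto3.client("redshift"). This wrapper is illustrative only.
    """
    if number_of_nodes < 1:
        raise ValueError("number_of_nodes must be at least 1")
    return client.resize_cluster(
        ClusterIdentifier=cluster_id,
        NumberOfNodes=number_of_nodes,
        Classic=False,  # request an elastic resize rather than a classic one
    )


# Usage, assuming boto3 is installed and credentials are configured:
#   import boto3
#   resize_redshift_cluster(boto3.client("redshift"), "my-example-cluster", 4)
```

Whether an elastic resize is actually available depends on the node type and the target size, so treat this as a starting point rather than a complete scaling routine.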

What Makes Redshift Unique?

When Redshift was first launched, it represented a true paradigm shift from traditional data warehouses provided by the likes of Oracle and Teradata. As a fully managed service, Redshift allowed development teams to shift their focus away from infrastructure and toward core application development. The ability to add compute resources automatically with just a few clicks or lines of code, rather than having to set up and configure hardware, was revolutionary and allowed for much faster application development cycles.

Today, many modern cloud data warehouses offer similar linear scaling and infrastructure-as-a-service functionality. Notable examples include Snowflake and Google BigQuery. However, Redshift remains a very popular choice and is tightly integrated with other services in the AWS cloud ecosystem.

Amazon continues to improve Redshift, and in recent years has introduced federated query capabilities, Redshift Serverless, and AQUA (a hardware-accelerated cache).

Redshift Use Cases

Although it is based on PostgreSQL, Redshift is optimized for online analytical processing (OLAP) and business intelligence (BI). These workloads typically execute complex SQL queries on large volumes of data, in contrast to transactional processing, which focuses on efficiently retrieving and manipulating a single row.
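The difference between the two query styles can be shown with a small, self-contained example. The sketch below uses Python's built-in `sqlite3` module purely as a stand-in so that it runs anywhere; the `orders` table and its contents are hypothetical, and against Redshift you would run similar SQL through a PostgreSQL-compatible driver instead.

```python
import sqlite3

# In-memory SQLite database used only as a stand-in for a real warehouse.
conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE orders (order_id INTEGER PRIMARY KEY, region TEXT, amount REAL)"
)
conn.executemany(
    "INSERT INTO orders VALUES (?, ?, ?)",
    [(1, "EU", 120.0), (2, "US", 80.0), (3, "EU", 50.0)],
)

# Transactional style: fetch a single row by its key.
single_order = conn.execute(
    "SELECT order_id, region, amount FROM orders WHERE order_id = ?", (2,)
).fetchone()

# Analytical style: scan many rows and aggregate them.
revenue_by_region = conn.execute(
    "SELECT region, SUM(amount) FROM orders GROUP BY region ORDER BY region"
).fetchall()

conn.close()
```

The first query touches one row and every column; the second touches every row but only two columns, which is the access pattern a columnar MPP warehouse is built for.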

Some common use cases for Redshift include:

- Enterprise data warehouse: Even smaller organizations often work with data from multiple sources such as advertising, CRM, and customer support. Redshift can be used as a centralized repository that stores data from different sources in a unified schema and structure to create a single source of truth. This can then feed enterprise-wide reporting and analytics.

- BI and analytics: Redshift’s fast query execution against terabyte-scale data makes it an excellent choice for business intelligence use cases. Redshift is often used as the underlying database for BI tools such as Tableau (which otherwise might struggle to perform when querying or joining larger datasets).

- Embedded analytics and analytics as a service: Some organizations might choose to monetize the data they collect by exposing it to customers. Redshift’s data sharing, search, and aggregation capabilities make it viable for these scenarios, as it allows exposing only relevant subsets of data per customer while ensuring other databases, tables, or rows remain secure and private.

- Production workloads: Redshift’s performance is consistent and predictable, as long as the cluster is adequately resourced. This makes it a popular choice for data-driven applications, which might use data for reporting or perform calculations on it.

- Change data capture and database migration: AWS Database Migration Service (DMS) can be used to replicate changes in an operational data store into Amazon Redshift. This is typically done to provide more flexible analytical capabilities, or when migrating from legacy data warehouses.
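To make the change data capture use case more concrete, the sketch below replays a stream of change events onto a copy of a table. It is a conceptual model only: the event shape (`op`, `key`, `row`) and the `apply_changes` helper are hypothetical and do not reflect the format AWS DMS actually uses.

```python
def apply_changes(target, changes):
    """Replay an ordered stream of change events onto a keyed target table."""
    for change in changes:
        op, key = change["op"], change["key"]
        if op in ("insert", "update"):
            # Merge so that partial updates keep the untouched columns.
            target[key] = {**target.get(key, {}), **change["row"]}
        elif op == "delete":
            target.pop(key, None)
        else:
            raise ValueError(f"unknown operation: {op}")
    return target


# Hypothetical replica of an operational 'customers' table, keyed by id.
warehouse_copy = {1: {"name": "Ada", "plan": "free"}}
changes = [
    {"op": "update", "key": 1, "row": {"plan": "pro"}},
    {"op": "insert", "key": 2, "row": {"name": "Lin", "plan": "free"}},
    {"op": "delete", "key": 1},
]
apply_changes(warehouse_copy, changes)
```

Events must be applied in the order they occurred in the source; replaying them out of order could, for example, resurrect a deleted row.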

Redshift Challenges and Limitations

While Amazon Redshift is a powerful and versatile data warehouse, it still suffers from the limitations of any relational database, including:

- Costs: Because storage and compute are coupled, Redshift costs can quickly grow very high. This is especially noticeable when working with larger datasets, or with streaming sources such as application logs.

- Complex data ingestion: Unlike Amazon S3, Redshift does not support unstructured object storage. Data needs to be stored in tables with predefined schemas. This often requires complex ETL or ELT processes when data is written to Redshift.

- Access to historical data: Due to the above limiting factors, most organizations choose to store only a subset of raw data in Redshift, or limit the number of historical versions of the data that they retain.

- Vendor lock-in: Migrating data between relational databases is always a challenge due to the rigid schema and file formats used by each vendor. This can create significant vendor lock-in and make it difficult to use other tools to analyze or access data.

Due to these limitations, Redshift is often a less than ideal solution for use cases that require diverse access to very large volumes of data, such as exploratory data science and machine learning. In these cases, many organizatio